S3 Push Delivery is a method that studios, fulfillment vendors, and other content providers can use to deliver files to Prime Video.

Amazon Simple Storage Service (S3) is an object storage service from Amazon Web Services (AWS). S3 Push Delivery allows partners to securely copy files from their own Amazon S3 bucket directly to Prime Video.

S3 Push Delivery offers several benefits, including:

- Lower cost: Transfers from a content provider’s S3 bucket to Prime Video using S3 Push Delivery incur reduced AWS egress fees when the bucket is hosted in a North America or EMEA AWS Region.

- Faster delivery speeds: Files delivered via S3 Push Delivery transfer up to 3x faster than those delivered via other bulk delivery methods.

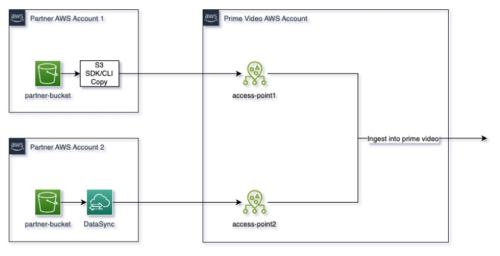

The following system diagram illustrates how files from two different partner AWS accounts move through a chosen file transfer method (S3 Copy or AWS DataSync) to an access point in a Prime Video AWS account, and are then ingested into Prime Video.

Get started

To receive and test an S3 access point where you can deliver your files:

- Raise a Contact us support ticket to request onboarding to S3 Push Delivery, or ask your POM or CAM to raise one on your behalf. Include the following information in the request:

  - The AWS Identity and Access Management (IAM) roles that need access. For example: arn:aws:iam:::role/this-is-a-test-role

  - The names of the Prime Video Delivery Accounts that will be delivering on your behalf. For example: test_account

  - The AWS Region where your S3 bucket is hosted.

Your Amazon contact will then inform you of the next steps and share the S3 access point to reference for your deliveries. For example: arn:aws:s3:::test-s3-accesspoint-agsen8dehko1eyjxo3myhtui4cgs4use1b-s3alias

- Update your IAM roles with the policies needed to copy your files to Prime Video’s S3 bucket via your access point. The following example shows the expected permissions. In this code:

- Replace the source_bucket name with your own S3 bucket name.

- Replace the destination_bucket name, destination_bucket_access_point_alias, prime_video_provided_prefix, and access_point_name with the information provided by your POM.

      {
          "Version": "2012-10-17",
          "Statement": [
              {
                  "Sid": "Statement1",
                  "Effect": "Allow",
                  "Action": [
                      "s3:GetObject",
                      "s3:PutObject",
                      "s3:ListBucket"
                  ],
                  "Resource": [
                      "arn:aws:s3:::source_bucket",
                      "arn:aws:s3:::source_bucket/*",
                      "arn:aws:s3:::destination_bucket",
                      "arn:aws:s3:::destination_bucket/*",
                      "arn:aws:s3:::destination_bucket_access_point_alias",
                      "arn:aws:s3:::destination_bucket_access_point_alias/prime_video_provided_prefix/*",
                      "arn:aws:s3:us-east-1:1231231231:accesspoint/access_point_name",
                      "arn:aws:s3:us-east-1:1231231231:accesspoint/access_point_name/object/prime_video_provided_prefix/*"
                  ]
              }
          ]
      }
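To avoid hand-editing each ARN, the policy above could be generated from the values you receive. The following is a minimal sketch rather than an official tool: the function name `build_delivery_policy` and its parameters are hypothetical, and the Region and account ID in the usage example are simply the placeholders that appear in the policy above.

```python
import json


def build_delivery_policy(source_bucket, destination_bucket, access_point_alias,
                          prefix, access_point_name, region, account_id):
    """Return the IAM policy shown above as a dict, with the placeholders filled in."""
    access_point_arn = (
        f"arn:aws:s3:{region}:{account_id}:accesspoint/{access_point_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Statement1",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
                "Resource": [
                    # Your own bucket and its objects
                    f"arn:aws:s3:::{source_bucket}",
                    f"arn:aws:s3:::{source_bucket}/*",
                    # Destination bucket, access point alias, and access point ARN
                    f"arn:aws:s3:::{destination_bucket}",
                    f"arn:aws:s3:::{destination_bucket}/*",
                    f"arn:aws:s3:::{access_point_alias}",
                    f"arn:aws:s3:::{access_point_alias}/{prefix}/*",
                    access_point_arn,
                    f"{access_point_arn}/object/{prefix}/*",
                ],
            }
        ],
    }


if __name__ == "__main__":
    policy = build_delivery_policy(
        source_bucket="source_bucket",
        destination_bucket="destination_bucket",
        access_point_alias="destination_bucket_access_point_alias",
        prefix="prime_video_provided_prefix",
        access_point_name="access_point_name",
        region="us-east-1",
        account_id="1231231231",
    )
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM policy editor. The values themselves still have to come from your own account and from your Amazon contact.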

After you’ve received your access point and updated your IAM role permissions, you can deliver files using one of the File transfer methods described in the next section.

- Via your chosen method, first deliver an initial test file (such as test.txt) and confirm that the file is visible in the Deliveries tab in Slate.

After confirmation, you are ready to begin delivering your content with S3 Push Delivery.

File transfer methods

We recommend using one of the following file transfer methods to deliver files from your S3 bucket.

S3 Copy (AWS Command Line Interface, SDKs, REST API, Console)

For information about how to copy objects using the AWS Command Line Interface (CLI), SDKs, REST API, or S3 console, see "To copy an object" in the Amazon S3 User Guide. The AWS CLI Command Reference describes how to run the various copy commands in the AWS CLI. For sample code and setup instructions for AWS Lambda and the AWS CLI, see the S3 copy methods section below.

AWS DataSync

AWS DataSync is an online data movement and discovery service that simplifies data migration and helps you quickly, easily, and securely transfer your file or object data to, from, and between AWS storage services. To learn how to use AWS DataSync, see this tutorial.

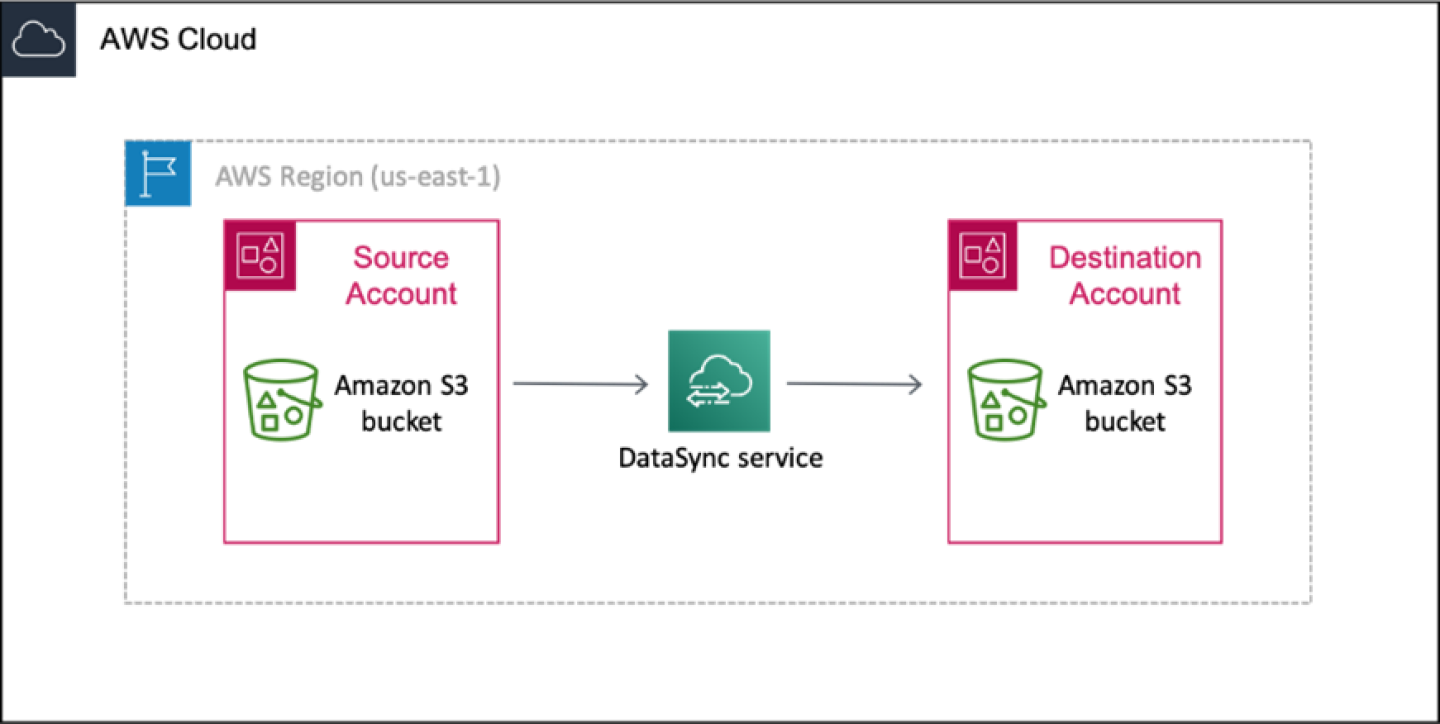

The following diagram illustrates a scenario where you transfer data from an S3 bucket to another S3 bucket that’s in a different AWS account, but within the same AWS region.

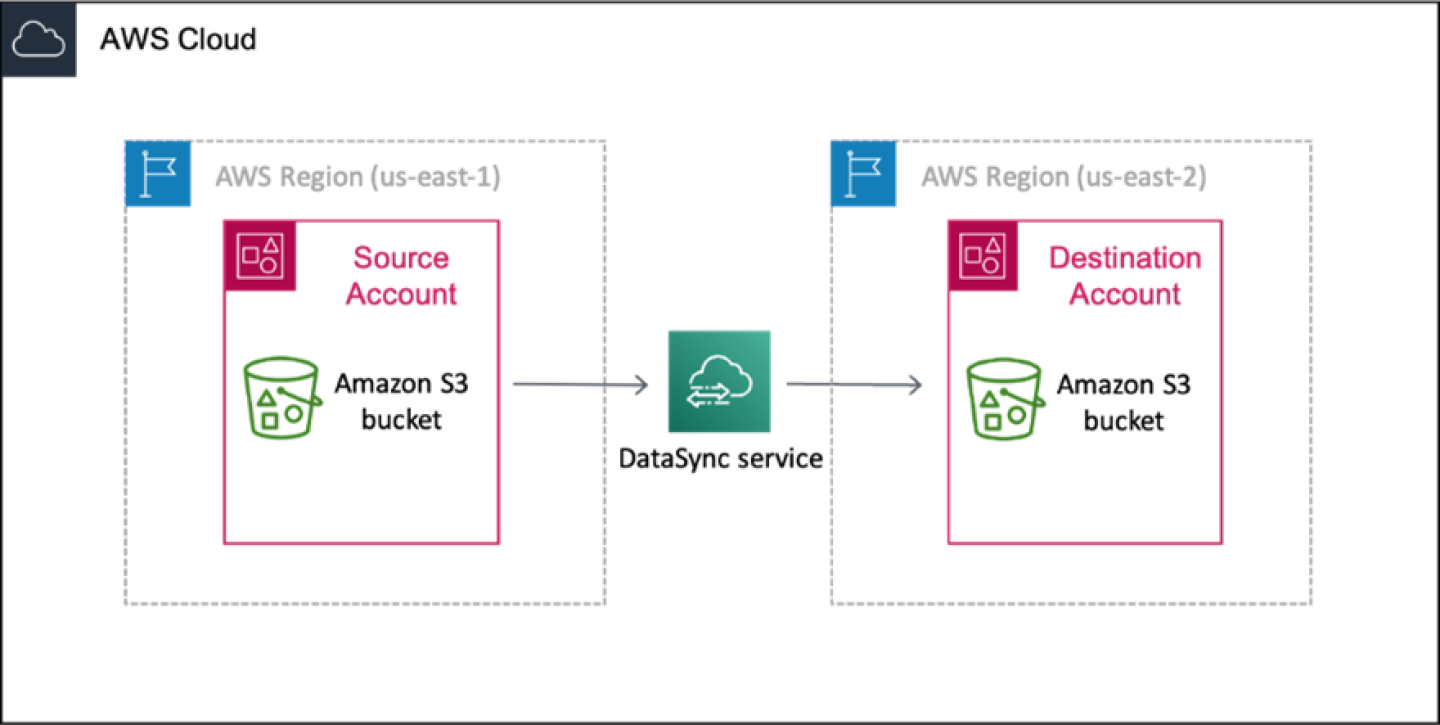

The following diagram illustrates a scenario where you transfer data from an S3 bucket to another S3 bucket that’s in a different AWS account and region.

S3 copy methods

This section provides sample code and setup instructions for two copy methods: AWS Lambda and the AWS CLI.

Copy objects with AWS Lambda

- Create a Python Lambda function in AWS. (This example was created with Python 3.12.)

- Use the IAM role you created and updated with the access point information from your POM.

- Use the following sample code to copy a file from the source to the destination S3 bucket.

      import boto3


      def lambda_handler(event, context):
          # Define your source S3 bucket name and file key
          source_bucket_name = 'source_bucket'
          source_file_key = 'source_prefix/sample_source_file.txt'
          copy_source = {'Bucket': source_bucket_name, 'Key': source_file_key}

          # Create an S3 client
          s3 = boto3.client('s3')

          # Define your destination S3 access point alias and file key
          destination_bucket_name = 'destination_bucket_alias'
          destination_file_key = 'prime_video_provided_prefix/sample_destination_file.txt'

          try:
              # Copy the object from the source S3 bucket to the destination S3 access point
              s3.copy_object(
                  CopySource=copy_source,
                  Bucket=destination_bucket_name,
                  Key=destination_file_key
              )
              return {
                  'statusCode': 200,
                  'body': 'File copied successfully to destination S3.'
              }
          except Exception as e:
              # Print any errors that occur
              print(f"Error: {e}")
              return {
                  'statusCode': 500,
                  'body': str(e)
              }

- Validate delivery by checking the Deliveries tab in Slate for the copied file.
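The sample handler copies one hard-coded file. One possible variation, sketched below, reads the source key from the invocation event and derives the destination key by placing the file name under the provided prefix. The event field `source_key`, the helper names, and passing the S3 client in as an argument are all assumptions made for this sketch (the last one so that the logic can be exercised without AWS access). None of them come from the Prime Video documentation. In Lambda, you would pass `boto3.client('s3')` as the client.

```python
import posixpath

SOURCE_BUCKET = "source_bucket"                   # replace with your S3 bucket name
DESTINATION_BUCKET = "destination_bucket_alias"   # access point alias you were given
DESTINATION_PREFIX = "prime_video_provided_prefix"


def destination_key(source_key, prefix=DESTINATION_PREFIX):
    """Place the source file name directly under the provided prefix."""
    return posixpath.join(prefix, posixpath.basename(source_key))


def copy_to_prime_video(s3_client, source_key):
    """Copy one object and return the destination key that was written."""
    key = destination_key(source_key)
    s3_client.copy_object(
        CopySource={"Bucket": SOURCE_BUCKET, "Key": source_key},
        Bucket=DESTINATION_BUCKET,
        Key=key,
    )
    return key


def handle(event, s3_client):
    """Handler body; expects a hypothetical event such as {"source_key": "..."}."""
    try:
        key = copy_to_prime_video(s3_client, event["source_key"])
        return {"statusCode": 200, "body": f"Copied to {key}"}
    except Exception as error:
        # Report any failure (missing field, access denied, and so on) to the caller
        return {"statusCode": 500, "body": str(error)}
```

Keep in mind that a single `copy_object` request can copy objects of up to 5 GB. For larger files, boto3 also offers the managed `copy` method, which performs a multipart copy. Whether that suits your delivery setup is something to confirm with your Amazon contact.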

Copy objects with AWS CLI

- Make sure the IAM role that you created has the statement2 with accountId added against AWS.

- Assume the IAM role that you created, using the following command.

      [cloudshell-user@ip-192-168-1-1 ~]$ aws sts assume-role --role-arn "arn:aws:iam::XXXXXXXXXXX:role/PrimeVideoDeliveryIAMRole" --role-session-name AWSCLI-Session
      {
          "Credentials": {
              "AccessKeyId": "XXXXXXXX",
              "SecretAccessKey": "XXXXXXXX",
              "SessionToken": "XXXXXXXX",
              "Expiration": "2024-09-03T23:37:43+00:00"
          },
          "AssumedRoleUser": {
              "AssumedRoleId": "XXXXXXXX:AWSCLI-Session",
              "Arn": "arn:aws:sts::XXXXXXXXXXX:assumed-role/PrimeVideoDeliveryIAMRole/AWSCLI-Session"
          }
      }

- Copy credentials and set them as environment variables.

      export AWS_ACCESS_KEY_ID=AccessKeyId_From_Above_Response
      export AWS_SECRET_ACCESS_KEY=SecretAccessKey_From_Above_Response
      export AWS_SESSION_TOKEN=SessionToken_From_Above_Response

- Validate that the shell has assumed the role by running the following command.

      [cloudshell-user@ip-192-168-1-1 ~]$ aws sts get-caller-identity
      {
          "UserId": "XXXXXXXX:AWSCLI-Session",
          "Account": "XXXXXXXXXXX",
          "Arn": "arn:aws:sts::XXXXXXXXXXX:assumed-role/PrimeVideoDeliveryIAMRole/AWSCLI-Session"
      }

- Copy the file to the destination using the following command.

      [cloudshell-user@ip-192-168-1-1 ~]$ aws s3api copy-object \
          --copy-source source_bucket/source_prefix/sample_source_file.txt \
          --key prime_video_provided_prefix/sample_destination_file.txt \
          --bucket destination_bucket_alias
      {
          "CopySourceVersionId": "5IsrJMy42TJEa7wXSValghbqQZjfAdnW",
          "VersionId": "A6sYhr9q4iDJy7VtWv9iscJdRWF4xE8S",
          "ServerSideEncryption": "AES256",
          "CopyObjectResult": {
              "ETag": "\"ae2b1fca515949e5d54fb22b8ed95575\"",
              "LastModified": "2024-09-03T22:40:01+00:00"
          }
      }

- Validate delivery by checking the Deliveries tab in Slate for the copied file.
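Copying the three credential values by hand in the environment variable step is error-prone. As an illustration only, the following Python sketch turns the JSON printed by `aws sts assume-role` into the corresponding `export` lines. The function name `credentials_to_exports` is hypothetical, and the sample values are the masked placeholders from the response shown in that step.

```python
import json
import shlex


def credentials_to_exports(assume_role_output):
    """Build shell export lines from the JSON text returned by `aws sts assume-role`."""
    credentials = json.loads(assume_role_output)["Credentials"]
    variables = {
        "AWS_ACCESS_KEY_ID": credentials["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": credentials["SecretAccessKey"],
        "AWS_SESSION_TOKEN": credentials["SessionToken"],
    }
    # shlex.quote guards against characters the shell would otherwise interpret
    return [f"export {name}={shlex.quote(value)}" for name, value in variables.items()]


if __name__ == "__main__":
    sample = json.dumps({
        "Credentials": {
            "AccessKeyId": "XXXXXXXX",
            "SecretAccessKey": "XXXXXXXX",
            "SessionToken": "XXXXXXXX",
            "Expiration": "2024-09-03T23:37:43+00:00",
        }
    })
    print("\n".join(credentials_to_exports(sample)))
```

The temporary credentials stop working at the time given in the `Expiration` field, so the role has to be assumed again for deliveries made after that point.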